Abstract

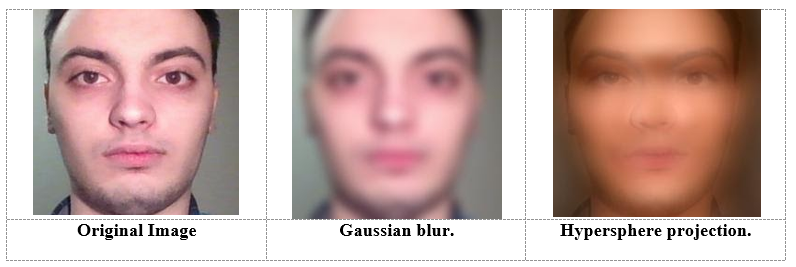

Privacy protection is a very important issue in the context of social media and the GDPR. This lecture overviews the face de-identification problem from an engineering perspective. In principle, face de-identification methods aim to apply an affine or non-linear transformation to an input facial image, so that the identity of the depicted person can no longer be recognized by humans or by automated human analysis tools. Traditional media applications mainly apply additive noise (e.g., pixelation, blurring) or reconstruction-based techniques to the facial image region, achieving sufficient de-identification performance at the expense of degraded image quality. Recently proposed deep learning-based generative methods for face de-identification promise excellent de-identification performance against automated tools while producing images that are visually pleasing but still of limited use to human viewers. Finally, adversarial-based face de-identification methods optimally generate the minimum additive noise required to disable automated face detection/recognition systems; thus, the de-identified images retain maximal utility for human viewers.
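The two classical obfuscation operations named above, pixelation and blurring, can be sketched on a grayscale image stored as a list of rows. This is a minimal, dependency-free illustration under stated assumptions: the face bounding box `box` is taken as given (in practice it would come from a face detector, not shown), and the helpers `pixelate` and `box_blur` and their parameters are hypothetical, not part of any method from the lecture.

```python
from typing import List, Tuple

Image = List[List[float]]        # grayscale image, row-major, values in [0, 255]
Box = Tuple[int, int, int, int]  # hypothetical face box: (top, left, height, width)


def pixelate(img: Image, box: Box, block: int) -> Image:
    """Replace each block x block tile inside `box` by its mean intensity."""
    out = [row[:] for row in img]  # copy so the input image stays untouched
    top, left, h, w = box
    for by in range(top, top + h, block):
        for bx in range(left, left + w, block):
            # Clip the tile to the face box so edge tiles may be smaller.
            ys = range(by, min(by + block, top + h))
            xs = range(bx, min(bx + block, left + w))
            mean = sum(img[y][x] for y in ys for x in xs) / (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


def box_blur(img: Image, box: Box, radius: int) -> Image:
    """Replace each pixel inside `box` by the mean of its (2r+1) x (2r+1) neighbourhood."""
    out = [row[:] for row in img]
    top, left, h, w = box
    rows, cols = len(img), len(img[0])
    for y in range(top, top + h):
        for x in range(left, left + w):
            # Neighbourhood clipped to the image borders; always reads the original image.
            ys = range(max(0, y - radius), min(rows, y + radius + 1))
            xs = range(max(0, x - radius), min(cols, x + radius + 1))
            out[y][x] = sum(img[yy][xx] for yy in ys for xx in xs) / (len(ys) * len(xs))
    return out
```

Larger `block` or `radius` values hide more identity information but also discard more image content, which is the quality trade-off described above.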

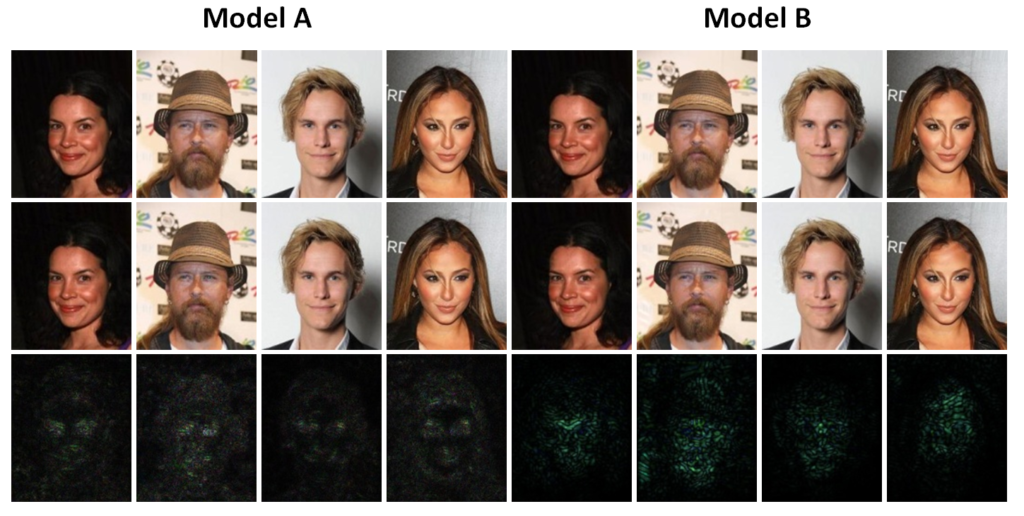

First row: original image; Second row: de-identified image.

Third row: absolute value of the adversarial perturbation, magnified ×10.
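The adversarial idea shown in the figure can be illustrated on a toy problem. The sketch below is an assumption-laden stand-in, not the lecture's own method: a linear score `w·x + b` (hypothetical `score`) plays the role of a face recognizer, where a positive score means the identity is recognized. For a linear model the smallest L2 perturbation that flips the decision has a closed form (the linear case of the DeepFool method); real detectors and recognizers are deep networks that require iterative optimization. The hypothetical `visualize` mimics the third-row display by magnifying |δ| ten times.

```python
from typing import List

Vec = List[float]


def score(w: Vec, b: float, x: Vec) -> float:
    """Toy linear 'recognizer': a score > 0 means the identity is recognized."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def minimal_perturbation(w: Vec, b: float, x: Vec, overshoot: float = 0.02) -> Vec:
    """Smallest L2 perturbation pushing x across the hyperplane w.x + b = 0.

    Closed form for a linear binary classifier (linear case of DeepFool):
    r = -f(x) / ||w||^2 * w, scaled by (1 + overshoot) so the result lands
    strictly on the non-recognized side of the boundary.
    """
    f = score(w, b, x)
    if f <= 0:
        return [0.0] * len(x)  # already not recognized: no noise needed
    norm_sq = sum(wi * wi for wi in w)
    step = -(1.0 + overshoot) * f / norm_sq
    return [step * wi for wi in w]


def visualize(delta: Vec, gain: float = 10.0) -> Vec:
    """|delta| magnified by `gain` and clipped to [0, 255] for display."""
    return [min(255.0, abs(d) * gain) for d in delta]
```

The illustrative `overshoot` nudges the point just past the boundary, so the perturbation norm is (1 + overshoot)·|f(x)|/‖w‖, barely more than the distance to the decision boundary; this is why such perturbations can stay nearly invisible to humans.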

Face-De-identification-for-Privacy-Protection-v3.3-Summary