Abstract

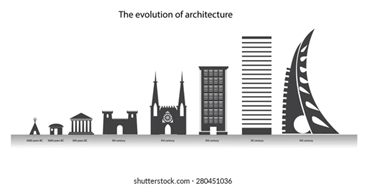

Our world is increasingly complex, in terms of both its material components (e.g., smart cities, infrastructure) and its social processes (e.g., social media outreach). Both individual humans and entire societies find it difficult to cope with world complexity. For example, people who are overexposed to a 24/7 information deluge through their mobile phones tend to develop the so-called Generalized OnLine Affect and Cognition (GOLAC) disorder. Its impact has not been studied sufficiently, yet it can be devastating to minors and vulnerable people, and it forms a good substrate for conspiracy theories and disinformation.

Artificial Intelligence (AI) in general, and Machine Learning in particular, is our response to world complexity. It allows us to handle the data flood, analyze data to produce information, and use that information not only to survive but also to excel. AI Science and Engineering enable social engineering, allowing us to devise social processes that change our society.

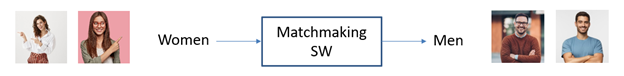

Information filtering is a prime example of social engineering. It encompasses many social processes: a) web search, b) recommendation systems for online product and service marketing, c) online match-making and d) news editing and broadcasting. Though these processes can have a very positive societal impact, they can also have adverse effects if poorly implemented. For example, they can result in large-scale theft of private data and its use to fuel corporate profits.

A lack of information filtering can look like a haven for freedom of speech. Yet the opposite frequently happens in social media environments. Irrationalism, cult culture, anti-intellectualism and anti-elitism pre-existed social media. However, social media have unique characteristics (small world phenomenon, rich-get-richer phenomenon, GOLAC disorder) that boost such tendencies and fuel disinformation. Sentimental and conspiratorial speech propagates like wildfire. Why? Social media company policies favor such voices because they drive user engagement (and, through marketing, company profits). The end result is that minority voices hijack the web and disinformation flourishes. AI also provides tools, e.g., for deepfake news creation, that can be misused to fuel disinformation and threaten democratic societies. What is the way forward to defend democracy?

This lecture addresses several important questions on the interface between technology and society:

- Why does our world become ever more complex?

- Can we cope with world complexity?

- What is the relation between freedom of speech and information filtering?

- What is the psychological background of online cults and conspiracy theories?

- Why do negative views propagate faster?

- What is the relation of irrationalism and anti-elitism to social media disinformation?

- How can we valorize our private data?

Bibliography

- I. Pitas, “Artificial Intelligence Science and Society Part A: Introduction to AI Science and Information Technology”

- I. Pitas, “Artificial Intelligence Science and Society Part B: AI Science, Mind and Humans”

- I. Pitas, “Artificial Intelligence Science and Society Part C: AI Science and Society”

- I. Pitas, “Artificial Intelligence Science and Society Part D: AI Science and the Environment”

Figure: Increasing world complexity.

Figure: Information filtering in match-making.

AISE societal impact v1.2