Abstract

This lecture covers the basic concepts and architecture of the Multi-Layer Perceptron (MLP), activation functions, and the Universal Approximation Theorem. The training of MLP neural networks is presented in detail: loss types, gradient descent, and error backpropagation. Common training problems are reviewed together with their solutions, e.g., stochastic gradient descent, adaptive learning rate algorithms, regularization, evaluation, and generalization methods.

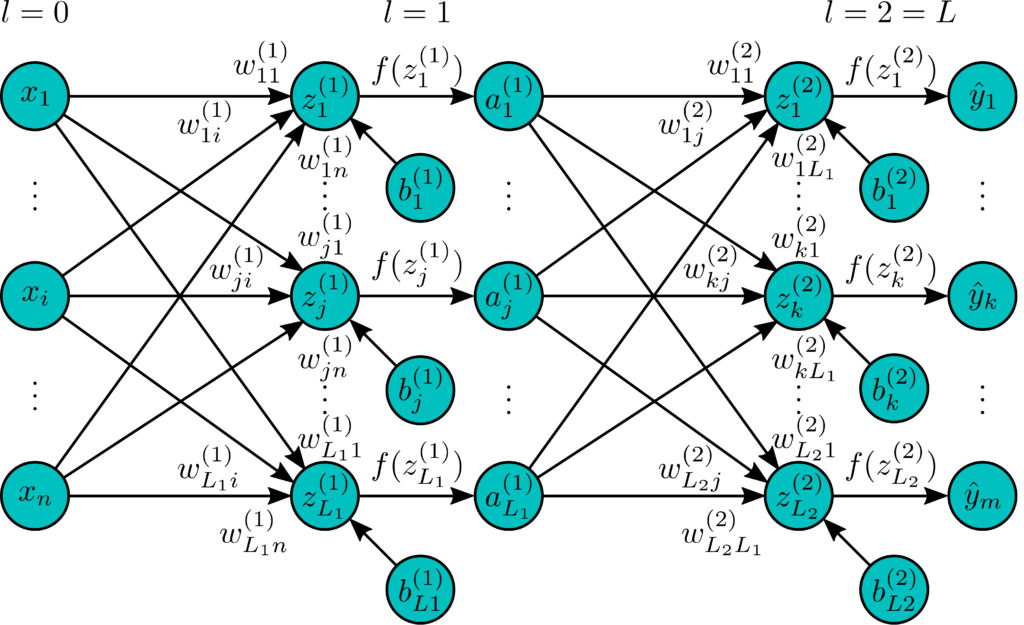

Two-layer MLP.

Steepest gradient descent.
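To make the two captions above concrete, the following is a minimal sketch of a two-layer MLP (one hidden layer plus one output unit) trained with full-batch steepest gradient descent and error backpropagation. The lecture does not fix these details, so the sigmoid activations, the squared-error loss, the XOR toy data, and the names `forward` and `train` are all assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass: returns hidden activations h and scalar output y."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def train(data, n_hidden=3, lr=0.5, epochs=2000, seed=0):
    """Full-batch gradient descent on the mean of 0.5 * (y - t)^2."""
    rng = random.Random(seed)
    n_in, n = len(data[0][0]), len(data)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        # Gradient accumulators, summed over the whole training set.
        gW1 = [[0.0] * n_in for _ in range(n_hidden)]
        gb1 = [0.0] * n_hidden
        gW2 = [0.0] * n_hidden
        gb2 = 0.0
        loss = 0.0
        for x, t in data:
            h, y = forward(x, W1, b1, W2, b2)
            loss += 0.5 * (y - t) ** 2
            # Output delta: dL/dz_out = (y - t) * sigmoid'(z_out).
            d_out = (y - t) * y * (1.0 - y)
            gb2 += d_out
            for j in range(n_hidden):
                # Hidden delta: the output error propagated back through W2.
                d_hid = d_out * W2[j] * h[j] * (1.0 - h[j])
                gW2[j] += d_out * h[j]
                gb1[j] += d_hid
                for i in range(n_in):
                    gW1[j][i] += d_hid * x[i]
        losses.append(loss / n)
        # Steepest descent step: move against the averaged gradient.
        for j in range(n_hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i] / n
        b2 -= lr * gb2 / n
    return (W1, b1, W2, b2), losses


# Hypothetical toy dataset (XOR), used only to exercise the code.
xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
       ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params, losses = train(xor)
print(f"mean loss: first epoch {losses[0]:.4f}, last epoch {losses[-1]:.4f}")
```

The sketch uses plain Python lists so that each backpropagation term stays visible. A practical implementation would more likely use vectorized array operations, and whether this small network actually separates XOR depends on the random initialization and the learning rate.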
