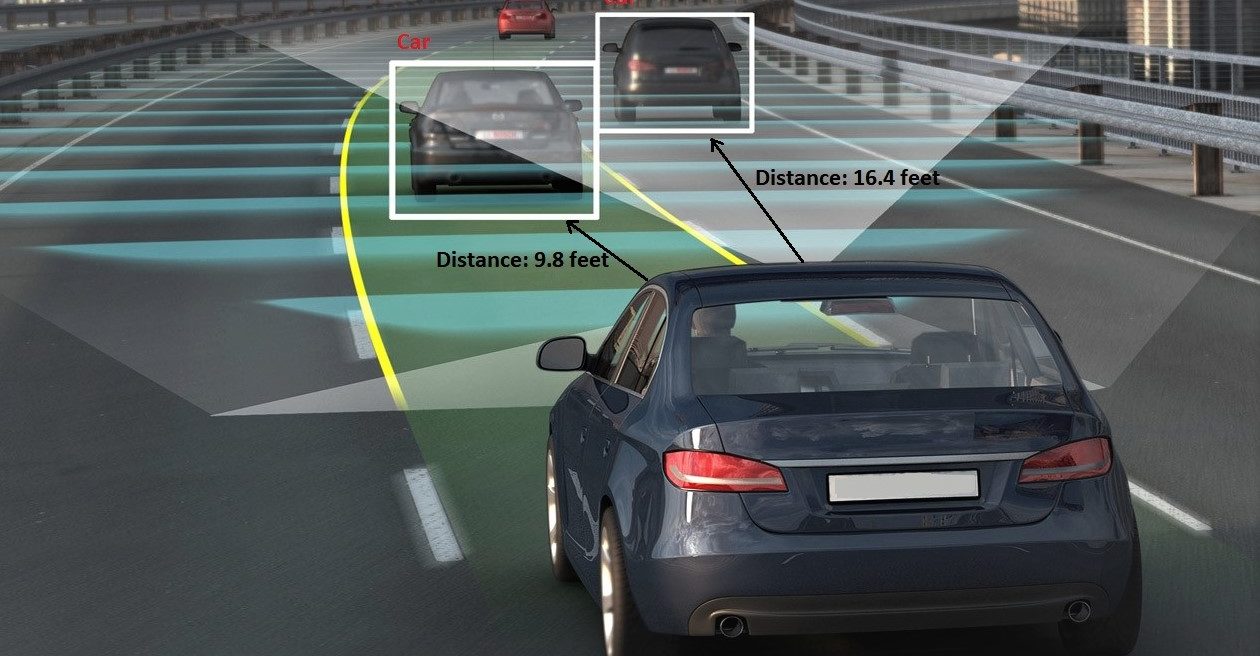

Applications focus on Autonomous/self-driving cars

DESCRIPTION

This two-day short course provides an overview and in-depth presentation of the various computer vision and deep learning problems encountered in autonomous systems, with applications focusing on autonomous/self-driving cars. It consists of two parts (A, B), each comprising 8 one-hour lectures.

Part A lectures provide an in-depth presentation of autonomous systems imaging and the relevant architectures, as well as a solid background on the necessary topics of computer vision (Image acquisition, camera geometry, Stereo and Multiview imaging, Motion estimation, Mapping and Localization). They also provide an overview of the state of the art in autonomous car vision and real-time road infrastructure monitoring.

Part B lectures provide an in-depth view of machine learning related topics (Introduction to neural networks, Multilayer NNs/backpropagation, Deep neural networks, Convolutional NNs, Deep learning for target detection). They will also cover Deep NN computation optimization schemes based on parallel architectures, GPU programming, and fast convolution algorithms. Furthermore, deep learning for 2D target (e.g., car, pedestrian) detection, tracking and 3D target localization will be detailed. Finally, additional topics related to autonomous car technologies, mainly focusing on car vision, will be presented, including road scene understanding and 3D road surface modeling.

The same machine learning and computer vision problems also arise in other autonomous systems applications, whether carried out by autonomous cars, drones or marine vessels, e.g., land/marine surveillance, search & rescue, infrastructure/building inspection and modeling, and cinematography.

The course content and exact lecture topics may vary from those above, depending on recent advances and the interests of the audience. Attendees who also want practical programming skills in the above-mentioned topics may wish to join the short course and workshop on Deep Learning and Computer Vision Programming for Drone Imaging, 28-30/08/2019, Aristotle University of Thessaloniki, which is held back to back with this course.

WHEN?

The course will take place on 26-27 August 2019.

WHERE?

The course will take place at KEDEA, 3is Septemvriou – Panepistimioupoli, 54636, Thessaloniki, Greece.

You can find additional information about the city of Thessaloniki and details on how to get to the city here.

PROGRAM

| Time*/date | 26/08/2019 | 27/08/2019 |
| --- | --- | --- |
| 08:00 – 09:00 | Registration | Registration |
| 09:00 – 10:00 | Introduction to autonomous systems imaging | Introduction to neural networks. Perceptron, backpropagation |
| 10:00 – 11:00 | Introduction to computer vision | Deep neural networks. Convolutional NNs |
| 11:00 – 11:30 | Coffee break | Coffee break |
| 11:30 – 12:30 | Image acquisition, camera geometry | Parallel GPU and CPU architectures. GPU programming |
| 12:30 – 13:30 | Stereo and Multiview imaging | Fast convolutions |
| 13:30 – 14:30 | Lunch break | Lunch break |
| 14:30 – 15:30 | Motion estimation | Deep learning for target detection |
| 15:30 – 16:30 | Mapping and localization | 2D Target tracking and 3D localization |
| 16:30 – 17:30 | Introduction to autonomous car vision | Road scene understanding |
| 17:30 – 18:30 | Real-time road infrastructure monitoring | |
| 20:00 | Welcome party!!! | |

*Eastern European Summer Time (EEST)

LECTURERS

Prof. Ioannis Pitas (IEEE Fellow, IEEE Distinguished Lecturer, EURASIP Fellow) received the Diploma and Ph.D. degrees in Electrical Engineering, both from the Aristotle University of Thessaloniki, Greece. Since 1994, he has been a Professor at the Department of Informatics of the same University. He has served as a Visiting Professor at several universities. His current interests are in the areas of image/video processing, machine learning, computer vision, intelligent digital media, human-centered interfaces, affective computing, 3D imaging, and biomedical imaging. He is currently leading the large European H2020 R&D project MULTIDRONE. He is also chair of the Autonomous Systems initiative.

Professor Pitas will deliver 12 lectures on deep learning and computer vision.

Rui (Ranger) Fan (member of IEEE, IEEE SPS, EURASIP) received his Bachelor’s degree in Control Science and Engineering in 2015 from the Harbin Institute of Technology, China. In 2018 he received his Ph.D. degree from the Department of Electrical and Electronic Engineering, University of Bristol, England. Since then he has been working as a Research Associate in the Robotics and Multi-Perception Laboratory (RAM-LAB), Robotics Institute, at the Hong Kong University of Science and Technology (HKUST). Dr. Rui won the best student paper award at the 2016 IEEE IST conference. His current interests include 3D reconstruction, multi-view geometry, autonomous driving, high-performance computing, optimization theory, and applications.

Dr. Rui will deliver 4 lectures on computer vision for autonomous cars.

TOPICS

26/08/2019 – Part A (first day, 8 lectures):

1. Introduction to autonomous systems imaging

Abstract: This lecture will provide an introduction and the general context for this new and emerging topic, presenting the aims of autonomous systems imaging and the many issues to be tackled, especially from an image/video analysis point of view, as well as the limitations imposed by the system’s hardware. Applications to autonomous cars, drones or marine vessels will be overviewed.

2. Introduction to computer vision

Abstract: A detailed introduction to computer vision will be given, mainly focusing on 3D data types as well as color theory. The basics of color theory will be presented, followed by the various color coordinate systems, and finally, image and video content analysis and sampling will be thoroughly described.

3. Image acquisition, camera geometry

Abstract: After a brief introduction to image acquisition and light reflection, the building blocks of modern cameras will be surveyed, along with geometric camera modeling. Several camera models, such as the pinhole and weak-perspective camera models, will subsequently be presented, with the most commonly used camera calibration techniques closing the lecture.
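As a taste of the camera geometry covered here, the following is a minimal sketch of pinhole projection, not course material. It assumes the 3D point is already expressed in camera coordinates with the z axis pointing forward; the function name `project_pinhole` and the numeric values are hypothetical and chosen only for illustration.

```python
def project_pinhole(point_3d, focal_length, principal_point=(0.0, 0.0)):
    """Project a 3D point (camera coordinates, z forward) onto the image plane.

    Illustrative pinhole model: u = f * x / z + cx, v = f * y / z + cy.
    focal_length is in pixels; principal_point is (cx, cy) in pixels.
    """
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    cx, cy = principal_point
    u = focal_length * x / z + cx
    v = focal_length * y / z + cy
    return (u, v)


# Hypothetical example: a point 10 units ahead, f = 500 px, 640x480 image center.
print(project_pinhole((2.0, 1.0, 10.0), 500.0, (320.0, 240.0)))  # (420.0, 290.0)
```

Dividing by z is what makes distant objects appear smaller; the weak-perspective model replaces the per-point z with a single average depth for the whole object.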

4. Stereo and Multiview imaging

Abstract: The workings of stereoscopic and multiview imaging will be explored in depth, focusing mainly on stereoscopic vision, geometry and camera technologies. Subsequently, the main methods of 3D scene reconstruction from stereoscopic video will be described, along with the basics of multiview imaging.

5. Motion estimation

Abstract: Motion estimation principles will be analyzed. Starting from 2D and 3D motion models, displacement estimation and quality metrics for motion estimation will subsequently be detailed. One of the basic motion estimation techniques, namely block matching, will also be presented, along with three alternative, faster methods. Phase correlation will be described next, followed by optical flow equation methods and a brief introduction to object detection and tracking.
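To make the block matching idea concrete, here is a minimal sketch of exhaustive (full-search) block matching with a sum-of-absolute-differences cost. It is an illustration under simple assumptions (grayscale frames stored as lists of lists, integer displacements), not the lecturer's implementation; all function names are hypothetical.

```python
def sad(frame_a, frame_b, ax, ay, bx, by, size):
    """Sum of absolute differences between two size x size blocks."""
    total = 0
    for dy in range(size):
        for dx in range(size):
            total += abs(frame_a[ay + dy][ax + dx] - frame_b[by + dy][bx + dx])
    return total


def block_match(prev, curr, x, y, size, search):
    """Find the displacement (dx, dy) of the block at (x, y) in prev
    that best matches curr, testing every offset within +/- search pixels."""
    height, width = len(curr), len(curr[0])
    best, best_cost = None, None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            nx, ny = x + dx, y + dy
            # Skip candidate blocks that fall outside the frame.
            if nx < 0 or ny < 0 or nx + size > width or ny + size > height:
                continue
            cost = sad(prev, curr, x, y, nx, ny, size)
            if best_cost is None or cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost


def make_frame(width, height, bx, by, size, value=255):
    """Black frame with one bright square, used as synthetic test data."""
    frame = [[0] * width for _ in range(height)]
    for dy in range(size):
        for dx in range(size):
            frame[by + dy][bx + dx] = value
    return frame


prev = make_frame(8, 8, 2, 2, 2)   # square at (2, 2)
curr = make_frame(8, 8, 3, 4, 2)   # same square moved by (+1, +2)
print(block_match(prev, curr, 2, 2, 2, 2))  # ((1, 2), 0)
```

The full search evaluates (2 * search + 1)^2 candidates per block, which is the cost that faster block matching variants aim to reduce.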

6. Mapping and localization

Abstract: This lecture covers the essentials of how robots/drones obtain the 2D and/or 3D maps they need, by taking measurements with appropriate sensors that allow them to perceive their environment. Semantic mapping covers how to add semantic annotations to the maps, such as POIs, roads and landing sites. The localization section addresses finding the 3D drone or target location from sensor data, specifically using Simultaneous Localization And Mapping (SLAM). Finally, drone localization fusion improves the accuracy of localization and mapping by exploiting the synergies between different sensors.

7. Introduction to autonomous car vision

Abstract: In this lecture, an overview of the autonomous car technologies will be presented (structure, HW/SW, perception), focusing on car vision. The HKUST autonomous vehicle will be presented as a special case, including its sensors and algorithms. Then, an overview of computer vision applications in this autonomous vehicle will be presented, such as visual odometry, lane detection, road segmentation, etc. Also, the current progress of autonomous driving will be introduced.

8. Real-time road infrastructure monitoring

Abstract: The condition assessment of road infrastructure is essential to ensure its serviceability while still providing maximum road traffic safety. This lecture presents an overview of techniques to inspect road infrastructure (potholes, large cracks, structural failures). Several techniques will be surveyed, e.g., based on robust stereo vision using drones. Highly efficient stereo matching, as well as depth image analysis algorithms, will be presented. Such algorithms can be easily implemented on embedded systems such as the NVIDIA Jetson TX2 GPU.

27/08/2019 – Part B (second day, 8 lectures):

1. Introduction to neural networks. Perceptron, backpropagation

Abstract: This lecture will cover the basic concepts of neural networks: biological neural models, perceptron, multilayer perceptron, classification, regression, design of neural networks, training neural networks, deployment of neural networks, activation functions, loss types, error backpropagation, regularization, evaluation, generalization.
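For readers new to the topic, the sketch below illustrates the simplest of the concepts listed, a single perceptron trained with the classic perceptron learning rule on the logical AND function. It is a hedged illustration only: the function names, the step activation, the learning rate and the toy data are assumptions made here, and backpropagation for multilayer networks is not shown.

```python
def predict(weights, bias, x):
    """Step-activation perceptron: output 1 if w . x + b > 0, else 0."""
    s = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: w <- w + lr * (target - output) * x."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy data: logical AND, which is linearly separable.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
print([predict(weights, bias, x) for x, _ in and_data])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is one motivation for the multilayer networks discussed in this lecture.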

2. Deep neural networks. Convolutional NNs

Abstract: From multilayer perceptrons to deep architectures. Fully connected layers. Convolutional layers. Tensors and mathematical formulations. Pooling. Training convolutional NNs. Initialization. Data augmentation. Batch Normalization. Dropout. Deployment on embedded systems. Lightweight deep learning.

3. Parallel GPU and multicore CPU architectures. GPU programming

Abstract: In this lecture, various GPU and multicore CPU architectures will be reviewed, notably those used in GPU cards and in embedded boards, like NVIDIA TX1, TX2, Xavier. The principles of the parallelization of various algorithms on GPU and multicore CPU architectures are reviewed. Subsequently, the essentials of GPU programming are presented. Finally, special attention is paid to: a.) fast and parallel linear algebra operations (e.g., using cuBLAS) and, b.) fast convolution algorithms, as all of them have particular importance in deep machine learning (CNNs) and in real-time computer vision.

4. Fast convolution algorithms

Abstract: Two major factors related to deep neural network models are the amount of time spent on training such models and the response time during DNN inference. Many autonomous systems vision-related applications require very low latency during inference. Both factors depend on how fast we can compute neural network operations, such as 2D and 3D convolutions. Introducing new and fast ways of conducting these operations can boost the computation speed of deep neural networks.

5. Deep learning for target detection

Abstract: Recently, Convolutional Neural Networks (CNNs) have been used for object/target (e.g., car, pedestrian, road sign) detection with great results. However, using such CNN models on embedded processors for real-time processing is hindered by hardware constraints. In that sense, various architectures and settings will be examined in order to facilitate and accelerate the use of CNN-based object detectors on embedded platforms with limited computational capabilities. The following target detection topics will be presented: Object detection as a search and classification task. Detection as a classification and regression task. Modern architectures for target detection (e.g., RCNN, Faster-RCNN, YOLO, SSD). Lightweight architectures. Data augmentation. Deployment. Evaluation and benchmarking.
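As an illustration of what detector evaluation typically involves, the sketch below computes intersection over union (IoU) between a predicted and a ground-truth bounding box. IoU is a commonly used detection metric, but the abstract does not name it, so treat its inclusion here as an assumption; the function name and the (x1, y1, x2, y2) box format are likewise chosen only for this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Boxes are (x1, y1, x2, y2) with x1 < x2 and y1 < y2 (assumed format).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap rectangle, clamped at zero.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1).
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A detection is usually counted as correct when its IoU with a ground-truth box exceeds a chosen threshold; the threshold value depends on the benchmark.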

6. 2D Target tracking and 3D target localization

Abstract: Target tracking is a crucial component of many vision systems. Many approaches regarding person/object detection and tracking in videos have been proposed. In this lecture, video tracking methods using correlation filters or convolutional neural networks are presented, focusing on video trackers that are capable of achieving real-time performance for long-term tracking on embedded computing platforms.

7. Road scene understanding

Abstract: In this lecture, the lecturer will first introduce several road scene understanding tasks, i.e., road segmentation, lane detection, car plate description, and pedestrian detection. Then, state-of-the-art algorithms for deep learning-based road segmentation, stereo vision-based lane detection, and deep learning-based car plate and sign detection will be detailed. Some source code will be provided to attendees for experimentation.

8. 3D road surface modelling

Abstract: Various methods can be used for 3D road surface modeling, notably based on stereo vision or LIDARs, producing disparity/depth maps or 3D point clouds, respectively. In this lecture, several such modeling methods will be presented, together with the calibration between different sensors (LIDAR, camera). Furthermore, a state-of-the-art depth estimation algorithm based on data fusion will be presented.

AUDIENCE

Any practicing engineer or scientist, or student, with some knowledge of computer vision and/or machine learning, notably CS, CSE, ECE, EE students, graduates or industry professionals with a relevant background.

REGISTRATION

———————————————————————————————————————————————————

Early registration (till 14/07/2019):

• Standard: 300 Euros

• Undergraduate/MSc/PhD students*: 200 Euros

Later or on-site registration (after 14/07/2019):

• Standard: 350 Euros

• Undergraduate/MSc/PhD students*: 250 Euros

———————————————————————————————————————————————————

*Proof of student status should be provided upon registration.

If the page opens in Greek after you click the <<Register Now!>> button, use the option at the upper right of the page (next to the search field) to switch it to English.

Remote short course participation is allowed.

Lectures will be in English. PDF slides will be available to course attendees.

A certificate of attendance will be provided.

Cancellation policy:

• 70% refund for cancellation up to 31/05/2019

• 50% refund for cancellation up to 19/07/2019

• 0% refund afterwards

IF I HAVE A QUESTION?

SAMPLE COURSE MATERIAL & RELATED LITERATURE

1.) C. Regazzoni, I. Pitas, ‘Perspectives in Autonomous Systems research’, Signal Processing Magazine, September 2019

2.) Call for papers – Special Issue on Autonomous Driving

3.) Artificial neural networks

4.) 3D Shape Reconstruction from 2D Images

5.) Overview of self-driving car technologies

6.) I. Pitas, ‘3D imaging science and technologies’, Amazon CreateSpace preprint, 2019

7.) R. Fan, U. Ozgunalp, B. Hosking, M. Liu, I. Pitas, “Pothole Detection Based on Disparity Transformation and Road Surface Modeling”, IEEE Transactions on Image Processing (accepted for publication 2019).

8.) Rui Fan, Xiao Ai, Naim Dahnoun, “Road Surface 3D Reconstruction Based on Dense Subpixel Disparity Map Estimation”, IEEE Transactions on Image Processing, vol. 27, no. 6, June 2018

9.) Umar Ozgunalp, Rui Fan, Xiao Ai, Naim Dahnoun, “Multiple Lane Detection Algorithm Based on Novel Dense Vanishing Point Estimation”, IEEE Transactions on Intelligent Transportation Systems, vol. 18, no. 3, March 2017

10.) Rui Fan, Jianhao Jiao, Jie Pan, Huaiyang Huang, Shaojie Shen, Ming Liu, “Real-Time Dense Stereo Embedded in A UAV for Road Inspection”, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019

11.) Semi-Supervised Subclass Support Vector Data Description for image and video classification, V. Mygdalis, A. Iosifidis, A. Tefas, I. Pitas, Neurocomputing, 2017

12.) Neurons With Paraboloid Decision Boundaries for Improved Neural Network Classification Performance, N. Tsapanos, A. Tefas, N. Nikolaidis and I. Pitas, IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 14 June 2018, pp 1-11

13.) Convolutional Neural Networks for Visual Information Analysis with Limited Computing Resources, P. Nousi, E. Patsiouras, A. Tefas, I. Pitas, 2018 IEEE International Conference on Image Processing (ICIP), Athens, Greece, October 7-10, 2018

14.) Learning Multi-graph regularization for SVM classification, V. Mygdalis, A. Tefas, I. Pitas, 2018 IEEE International Conference on Image Processing (ICIP), Athens, Greece, October 7-10, 2018

15.) Quality Preserving Face De-Identification Against Deep CNNs, P. Chriskos, R. Zhelev, V. Mygdalis, I. Pitas, 2018 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Aalborg, Denmark, September 2018

16.) P. Chriskos, O. Zoidi, A. Tefas, and I. Pitas, “De-identifying facial images using singular value decomposition and projections”, Multimedia Tools and Applications, 2016

17.) Deep Convolutional Feature Histograms for Visual Object Tracking, P. Nousi, A. Tefas, I. Pitas, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019

18.) Exploiting multiplex data relationships in Support Vector Machines, V. Mygdalis, A. Tefas, I. Pitas, Pattern Recognition 85, pp. 70-77, 2019

USEFUL LINKS

• Prof. Ioannis Pitas: https://scholar.google.gr/citations?user=lWmGADwAAAAJ&hl=el

• Department of Computer Science, Aristotle University of Thessaloniki (AUTH): https://www.csd.auth.gr/en/

• Laboratory of Artificial Intelligence and Information Analysis: http://www.aiia.csd.auth.gr/

• Thessaloniki: https://wikitravel.org/en/Thessaloniki