DESCRIPTION

This two-day short course provides an overview and in-depth presentation of the various computer vision and deep learning problems encountered in autonomous systems perception, e.g. in drone imaging or autonomous car vision. It consists of two parts (A, B) and each of them includes up to 7 one-hour lectures.

Part A lectures (7 hours) provide an in-depth presentation of autonomous systems imaging and the relevant architectures, as well as a solid background on the necessary topics of computer vision (Camera geometry, Stereo and Multiview imaging, Introduction to multiple drone systems, Simultaneous Localization and Mapping, Drone mission planning and control, Introduction to autonomous marine vehicles).

Part B lectures (7 hours) provide in-depth views of the various machine learning topics (Multilayer Perceptron, Backpropagation, Deep neural networks, Convolutional NNs, Deep Object Detection, 2D Visual Object Tracking, Neural SLAM) encountered in autonomous systems perception, ranging from vehicle localization and mapping to target detection and tracking, autonomous systems communications and embedded CPU/GPU computing. Part B also contains application-oriented lectures on autonomous drones, cars and marine vessels (e.g. for land/marine surveillance, search and rescue missions, infrastructure/building inspection and modeling, and cinematography).

WHEN?

The course will take place on 22-23 August 2022.

WHERE?

All lectures and workshops will be delivered remotely.

The course link is the following: https://authgr.zoom.us/j/93924821331

PROGRAM

| Time*/date | 22/08/2022 | 23/08/2022 |
| --- | --- | --- |
| 09:30 – 10:00 | Registration | |
| 10:00 – 11:00 | Introduction to autonomous systems | Multilayer perceptron. Backpropagation |
| 11:00 – 12:00 | Camera geometry | Deep neural networks. Convolutional NNs |
| 12:00 – 12:30 | Coffee break | Coffee break |
| 12:30 – 13:30 | Stereo and Multiview imaging | Deep Object Detection |
| 13:30 – 14:30 | Introduction to multiple drone systems | 2D Visual Object Tracking |
| 14:30 – 15:30 | Lunch break | Lunch break |
| 15:30 – 16:30 | Simultaneous Localization and Mapping | Neural SLAM |
| 16:30 – 17:30 | Drone mission planning and control | CVML Software development tools |
| 17:30 – 18:00 | Coffee break | Coffee break |
| 18:00 – 19:00 | Introduction to autonomous marine vehicles | Applications in car vision |

*Eastern European Summer Time (EEST)

**This programme is indicative and may be modified without prior notice; any (hopefully small) changes in lectures/lecturers will be announced.

***Each topic will include a 45-minute lecture and a 15-minute break.

————————————————————————————————————————————————————————-

REGISTRATION

————————————————————————————————————————————————————————-

Early registration (till 15/07/2022):

• Standard: 300 Euros

• Unemployed or Undergraduate/MSc/PhD student*: 200 Euros

Later or on-site registration (after 15/07/2022):

• Standard: 350 Euros

• Unemployed or Undergraduate/MSc/PhD student*: 250 Euros

*Proof of unemployment or student status should be provided upon registration.

After the completion of your payment, please fill in the form below:

Lectures will be in English. PDF slides will be available to course attendees.

A certificate of attendance will be provided by AUTH upon successful completion of the course.

Cancellation policy:

- 50% refund for cancellation up to 15/07/2022

- 0% refund afterwards

Every effort will be made to run the course as planned. Due to the special COVID-19 circumstances, the organizer (AUTH) reserves the right to cancel the event at any time by simple notice to the registrants (by email or by announcing it on the course web page). In this case, each registrant will be reimbursed 100% of the registration fee. However, the organizer will not be held liable for any other loss incurred by the registrants.

————————————————————————————————————————————————————————-

LECTURERS

Prof. Ioannis Pitas (IEEE Fellow, IEEE Distinguished Lecturer, EURASIP Fellow) received the Diploma and PhD degrees in Electrical Engineering, both from the Aristotle University of Thessaloniki (AUTH), Greece. Since 1994, he has been a Professor at the Department of Informatics of AUTH and Director of the Artificial Intelligence and Information Analysis (AIIA) lab. He has served as a Visiting Professor at several universities.

His current interests are in the areas of computer vision, machine learning, autonomous systems, intelligent digital media, image/video processing, human-centred computing, affective computing, 3D imaging and biomedical imaging. He has published over 920 papers, contributed to 45 books in his areas of interest and edited or (co-)authored another 11 books. He has also been a member of the program committees of many scientific conferences and workshops. In the past, he served as Associate Editor or co-Editor of 13 international journals and as General or Technical Chair of 5 international conferences. He has delivered 98 keynote/invited speeches worldwide. He has co-organized 33 conferences and participated in the technical committees of 291 conferences. He has participated in 71 R&D projects, primarily funded by the European Union, and is/was principal investigator in 43 of them.

He is the AUTH principal investigator in the H2020 R&D projects Aerial Core and AI4Media (one of the 4 H2020 ICT48 AI flagship projects) and in the Horizon Europe R&D projects AI4Europe and SIMAR. He is chair of the International AI Doctoral Academy (AIDA) https://www.i-aida.org/. He was chair and initiator of the IEEE Autonomous Systems Initiative https://ieeeasi.signalprocessingsociety.org/. Prof. Pitas led the large European H2020 R&D project MULTIDRONE (https://multidrone.eu/). He has 34000+ citations to his work and an h-index of 86+. According to research.com (https://research.com/), he is ranked first in Greece and 319th worldwide in the field of Computer Science (2022).

Educational record of Prof. I. Pitas: He has been a Visiting/Adjunct/Honorary Professor/Researcher and has lectured at several universities: University of Toronto (Canada), University of British Columbia (Canada), EPFL (Switzerland), Chinese Academy of Sciences (China), University of Bristol (UK), Tampere University of Technology (Finland), Yonsei University (Korea), Erlangen-Nurnberg University (Germany), National University of Malaysia, Henan University (China). He has delivered 90 invited/keynote lectures at prestigious international conferences and top universities worldwide. He has run 17 short courses and tutorials on Autonomous Systems, Computer Vision and Machine Learning, most of them in the past 3 years, in many countries, e.g., USA, UK, Italy, Finland, Greece, Australia, N. Zealand, Korea, Taiwan, Sri Lanka, Bhutan.

http://www.aiia.csd.auth.gr/LAB_PEOPLE/IPitas.php

https://scholar.google.gr/citations?user=lWmGADwAAAAJ&hl=el

TOPICS

22/08/2022 – Part A (first day, 7 lectures):

1. Introduction to autonomous systems

Abstract: This lecture will provide an introduction and the general context for this new and emerging topic, presenting the aims of autonomous systems imaging and the many issues to be tackled, especially from an image/video analysis point of view as well as the limitations imposed by the system’s hardware. Applications on autonomous cars, drones or marine vessels will be overviewed.


2. Camera geometry

Abstract: After a brief introduction to image acquisition and light reflection, the building blocks of modern cameras will be surveyed, along with geometric camera modeling. Several camera models, such as the pinhole and weak-perspective camera models, will subsequently be presented, with the most commonly used camera calibration techniques closing the lecture.
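To make the difference between the two camera models concrete, here is a minimal sketch, assuming an ideal camera with intrinsics fx, fy, cx, cy (no skew, no lens distortion) and extrinsics R, t. The function names and numeric values are illustrative only, not taken from the lecture material. The pinhole model divides each point by its own depth, while the weak-perspective model divides every point by one shared reference depth.

```python
def to_camera(point_w, R, t):
    """World -> camera coordinates: X_c = R X_w + t (R given as a list of 3 rows)."""
    return [sum(R[i][j] * point_w[j] for j in range(3)) + t[i] for i in range(3)]


def project_pinhole(point_w, R, t, fx, fy, cx, cy):
    """Full perspective projection: each point is divided by its own depth Z_c."""
    x, y, z = to_camera(point_w, R, t)
    if z <= 0:
        raise ValueError("point lies behind the camera")
    return fx * x / z + cx, fy * y / z + cy


def project_weak_perspective(point_w, R, t, fx, fy, cx, cy, z_ref):
    """Weak perspective: all points are divided by one reference depth z_ref
    (e.g. the average object depth). This approximates the pinhole model well
    when the object's depth extent is small compared with its distance."""
    x, y, _ = to_camera(point_w, R, t)
    return fx * x / z_ref + cx, fy * y / z_ref + cy
```

For example, with R the identity, t = [0, 0, 0], fx = fy = 500 and (cx, cy) = (320, 240), the point (1, 0.5, 10) projects to pixel (370, 265) under the pinhole model.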

3. Stereo and Multiview imaging

Abstract: Stereoscopic and multiview imaging will be explored in depth, as they have many applications, ranging from autonomous car/drone/robot/vessel vision to Surveying Engineering to Medical Imaging. The focus will mainly be on stereoscopic vision, geometry and camera technologies. Epipolar geometry, the essential and fundamental matrices, and stereo rectification are detailed. Subsequently, the main methods of disparity estimation and 3D scene reconstruction from stereoscopic video will be described, together with feature search and matching. Multiview imaging will be overviewed.
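As a small illustration of disparity estimation and depth recovery, the sketch below assumes an already rectified stereo pair. After rectification, corresponding points lie on the same image row, so matching reduces to a 1D search, and depth follows from Z = f·B/d (f: focal length in pixels, B: baseline, d: disparity). The brute-force sum-of-absolute-differences (SAD) window search is only the simplest possible matcher; the function names and parameters are hypothetical.

```python
def scanline_disparity(left_row, right_row, x, half_win, max_disp):
    """Disparity at column x of a rectified left scanline, found by brute-force
    SAD matching against windows shifted left by d in the right scanline.
    Assumes x + half_win < len(left_row)."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_win < 0:  # window would leave the right image
            break
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified pair; d must be positive."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters much more for distant points (small d) than for nearby ones.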

4. Introduction to multiple drone systems

Abstract: This lecture will provide the general context for this new and emerging topic, presenting the aims of drone vision, the challenges (especially from an image/video analysis and computer vision point of view), the important issues to be tackled, the limitations imposed by drone hardware, regulations and safety considerations, etc. An overview of the use of multiple drones in media production will be given. The three use scenarios, the challenges to be faced and the adopted methodology will be discussed in the first part of the lecture, followed by scenario-specific, media production and system platform requirements. The multiple-drone platform will be detailed in the second part of the lecture, beginning with a platform hardware overview, issues and requirements, and proceeding with safety and privacy protection issues. Finally, platform integration will be the closing topic of the lecture, elaborating on drone mission planning, object detection and tracking, UAV-based cinematography, target pose estimation, privacy protection, ethical and regulatory issues, potential landing site detection, crowd detection, semantic map annotation and simulations.

5. Simultaneous Localization and Mapping

Abstract: The lecture covers the essential knowledge of how to obtain the 2D and/or 3D maps that robots/drones need, by taking measurements with appropriate sensors that allow them to perceive their environment. Semantic mapping covers how to add semantic annotations, such as POIs, roads and landing sites, to the maps. The localization section covers how to find the 3D drone or target location from sensor data, specifically using Simultaneous Localization and Mapping (SLAM). Finally, drone localization fusion improves localization and mapping accuracy by exploiting the synergies between different sensors.
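The benefit of sensor fusion can be seen in the simplest possible case. The sketch below combines two independent Gaussian estimates of the same coordinate (say, a hypothetical GPS reading and a visual-odometry estimate) by inverse-variance weighting, which is the Kalman-filter measurement update for a static state. This is an illustrative assumption, not necessarily the fusion scheme presented in the lecture. The fused variance is always smaller than either input variance.

```python
def fuse_estimates(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same scalar quantity.

    Each estimate is weighted by its inverse variance, so the more certain
    sensor dominates. This equals the static-state Kalman update."""
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_mean = fused_var * (w_a * mean_a + w_b * mean_b)
    return fused_mean, fused_var
```

For instance, fusing x = 10 m (variance 4 m²) with x = 12 m (variance 4 m²) yields 11 m with variance 2 m². For 3D positions, the same rule can be applied per axis if the axes are assumed independent.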

6. Drone mission planning and control

Abstract: In this lecture, the audiovisual shooting mission is first formally defined. The introduced audiovisual shooting definitions are encoded in mission planning commands, i.e., a navigation and shooting action vocabulary, and their corresponding parameters. The drone mission commands, as well as the hardware/software architecture required for manual/autonomous mission execution, are described. The software infrastructure includes the planning modules, which assign, monitor and schedule different behaviours/tasks to the drone swarm according to director and environmental requirements, and the control modules, which execute the planned mission by translating high-level commands into desired drone+camera configurations, producing commands for the autopilot, camera and gimbal of the drone swarm.
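As a toy illustration of how a control module might translate a high-level "look at the target" shooting command into a camera configuration, the sketch below computes the gimbal yaw and pitch that point the camera from the drone position to the target. The coordinate frame (x east, y north, z up, yaw measured counter-clockwise from +x, negative pitch looking down) and the function name are hypothetical assumptions. Real autopilot and gimbal interfaces use their own conventions.

```python
import math


def gimbal_angles_to_target(drone_pos, target_pos):
    """Return (yaw_deg, pitch_deg) that point a camera from drone_pos at target_pos.

    Assumed (hypothetical) frame: x east, y north, z up; yaw is measured
    counter-clockwise from +x; a negative pitch means looking down."""
    dx = target_pos[0] - drone_pos[0]
    dy = target_pos[1] - drone_pos[1]
    dz = target_pos[2] - drone_pos[2]
    yaw = math.degrees(math.atan2(dy, dx))
    pitch = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return yaw, pitch
```

For example, a drone hovering 10 m above the ground and 10 m west of a ground-level target would point its camera with yaw 0° and pitch -45°.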

7. Introduction to autonomous marine vehicles

Abstract: Autonomous marine vehicles can be classified into surface vehicles (boats, ships) and underwater ones (submarines). They have many applications in marine transportation and marine/submarine surveillance, and they pose many challenges in environment perception/mapping and vehicle control, which will be reviewed in this lecture.

23/08/2022 – Part B (second day, 7 lectures):

1. Multilayer perceptron. Backpropagation

Abstract: This lecture covers the basic concepts and architectures of the Multi-Layer Perceptron (MLP), activation functions and the Universal Approximation Theorem. Training of MLP neural networks is presented in detail: loss types, gradient descent and error backpropagation. Training problems are overviewed, together with solutions, e.g., Stochastic Gradient Descent, adaptive learning rate algorithms, regularization, evaluation and generalization methods.
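To show what error backpropagation computes, here is a minimal pure-Python sketch of a one-hidden-layer MLP with sigmoid units, trained with a mean squared error loss. It uses full-batch gradient descent. This is a teaching sketch under these assumptions (sigmoid activations, MSE loss, a single output), not the lecture's implementation, and all function names are hypothetical. The output error is multiplied by the local sigmoid derivative y(1 - y), then propagated back through the output weights to each hidden unit.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Random initial weights for a 1-hidden-layer MLP with a single output."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    return W1, b1, W2, 0.0


def forward(params, x):
    """Forward pass: sigmoid hidden layer followed by a sigmoid output unit."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def loss_and_grads(params, X, T):
    """Mean of 0.5 * (y - t)^2 over the batch, and its gradients via backpropagation."""
    W1, b1, W2, b2 = params
    n = len(X)
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    loss = 0.0
    for x, t in zip(X, T):
        h, y = forward(params, x)
        loss += 0.5 * (y - t) ** 2 / n
        d_out = (y - t) * y * (1.0 - y) / n  # dL/d(output pre-activation)
        gb2 += d_out
        for j, hj in enumerate(h):
            gW2[j] += d_out * hj
            d_hid = d_out * W2[j] * hj * (1.0 - hj)  # error back-propagated to hidden unit j
            gb1[j] += d_hid
            for i, xi in enumerate(x):
                gW1[j][i] += d_hid * xi
    return loss, (gW1, gb1, gW2, gb2)


def gradient_step(params, grads, lr):
    """One gradient-descent update: theta <- theta - lr * dL/dtheta."""
    W1, b1, W2, b2 = params
    gW1, gb1, gW2, gb2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    W2 = [w - lr * g for w, g in zip(W2, gW2)]
    return W1, b1, W2, b2 - lr * gb2
```

For example, training on the XOR inputs X = [[0, 0], [0, 1], [1, 0], [1, 1]] with targets T = [0, 1, 1, 0] amounts to calling `loss_and_grads` and `gradient_step` in a loop. Stochastic gradient descent would instead call them on small random mini-batches of the training set.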

2. Deep neural networks. Convolutional NNs

Abstract: From multilayer perceptrons to deep architectures. Fully connected layers. Convolutional layers. Tensors and mathematical formulations. Pooling. Training convolutional NNs. Initialization. Data augmentation. Batch Normalization. Dropout. Deployment on embedded systems. Lightweight deep learning.
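The basic convolutional-layer operations named above can be sketched in a few lines of pure Python, for a single channel with stride 1 and no padding. As in most deep learning frameworks, the "convolution" below is implemented as cross-correlation (the kernel is not flipped). For an input of size H, kernel size K and stride S, the output size is floor((H - K) / S) + 1. The function names are illustrative assumptions.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """'Valid' 2D convolution (cross-correlation, as in DL frameworks), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw)) + bias
             for j in range(ow)]
            for i in range(oh)]


def relu(fmap):
    """Element-wise ReLU activation on a 2D feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2, stride=2):
    """Max pooling; output size is floor((H - size) / stride) + 1 per axis."""
    oh = (len(fmap) - size) // stride + 1
    ow = (len(fmap[0]) - size) // stride + 1
    return [[max(fmap[i * stride + a][j * stride + b]
                 for a in range(size) for b in range(size))
             for j in range(ow)]
            for i in range(oh)]
```

Chaining `conv2d_valid`, `relu` and `max_pool2d` gives one conv-ReLU-pool stage. A convolutional layer repeats this for every input/output channel pair and sums over the input channels.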


3. Deep Object Detection

Abstract: Recently, Convolutional Neural Networks (CNNs) have been used for object/target (e.g., face, person, car, pedestrian, road sign) detection with great results. However, hardware constraints hinder the use of such CNN models on embedded processors for real-time processing. Therefore, various architectures and settings will be examined in order to facilitate and accelerate the use of CNN-based object detectors on embedded processors with limited computational capabilities. The following target detection topics will be presented in detail: object detection as a search and classification task; detection as a classification and regression task; modern architectures for target detection (e.g., RCNN, Faster-RCNN, YOLO v4, SSD, RetinaNet, RBFNet, CornerNet, CenterNet, DETR); lightweight architectures; data augmentation; and deployment. Evaluation and benchmarking measures are also detailed.
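Two building blocks shared by most of the detectors listed above are the intersection-over-union (IoU) between boxes and greedy non-maximum suppression (NMS), which removes duplicate detections of the same object. IoU is also the matching criterion in standard evaluation, e.g. a detection typically counts as correct at IoU above 0.5 in PASCAL VOC-style mAP. The sketch below assumes boxes given as (x1, y1, x2, y2) and a single object class. The threshold and function names are illustrative.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes by decreasing score and keep a box only if it
    does not overlap any already-kept box by more than iou_threshold.
    Returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

In multi-class detectors, NMS is usually applied separately for each class.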

4. 2D Visual Object Tracking

Abstract: Object/target tracking is a crucial component of many vision systems. Object tracking issues, e.g., occlusion handling, feature loss and drifting to the background, are overviewed. Many approaches to person/object detection and tracking in videos have been proposed. In this lecture, video tracking methods using correlation filters or convolutional neural networks are presented, focusing on video trackers that can achieve real-time performance for long-term tracking on embedded computing platforms. Joint object detection and tracking methods are detailed. Tracking performance metrics are overviewed.
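Two tracking performance measures commonly reported on single-object tracking benchmarks are precision, the fraction of frames whose predicted box centre lies within a pixel threshold of the ground truth, and success rate, the fraction of frames whose predicted/ground-truth IoU exceeds an overlap threshold. The sketch below assumes boxes given as (x, y, w, h) and uses 20 px and 0.5 as thresholds. Those defaults and the function names are assumptions for illustration. Benchmarks often summarize success over all thresholds with the area under the success curve.

```python
import math


def center_error(pred, gt):
    """Euclidean distance in pixels between box centres; boxes are (x, y, w, h)."""
    pcx, pcy = pred[0] + pred[2] / 2.0, pred[1] + pred[3] / 2.0
    gcx, gcy = gt[0] + gt[2] / 2.0, gt[1] + gt[3] / 2.0
    return math.hypot(pcx - gcx, pcy - gcy)


def overlap(pred, gt):
    """Intersection-over-union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(pred[0] + pred[2], gt[0] + gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[1] + pred[3], gt[1] + gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    return inter / union if union > 0 else 0.0


def precision(preds, gts, max_error_px=20.0):
    """Fraction of frames whose centre location error is within max_error_px."""
    errors = [center_error(p, g) for p, g in zip(preds, gts)]
    return sum(e <= max_error_px for e in errors) / len(errors)


def success_rate(preds, gts, min_iou=0.5):
    """Fraction of frames whose predicted/ground-truth IoU exceeds min_iou."""
    ious = [overlap(p, g) for p, g in zip(preds, gts)]
    return sum(o > min_iou for o in ious) / len(ious)
```

A tracker that drifts to the background shows up in both measures as a run of frames with large centre errors and near-zero overlap.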

5. Neural SLAM

Abstract: This lecture overviews Neural SLAM, which has many applications in robotic and autonomous vehicle localization and mapping. It covers the following topics in detail: Neural Camera Calibration, Neural Mapping/Reconstruction, Neural Localization, Neural SfM, Neural SLAM.

6. CVML Software development tools

Abstract: This lecture overviews the various SW tools, libraries and environments used in computer vision and machine learning: Robot Operating System (ROS), libraries (OpenCV, BLAS, cuBLAS, MKL-DNN, cuDNN), DNN frameworks (Neon, TensorFlow, PyTorch, Keras, MXNet), distributed/cloud computing (MapReduce programming model, Apache Spark) and collaborative SW development tools (GitHub, Bitbucket).

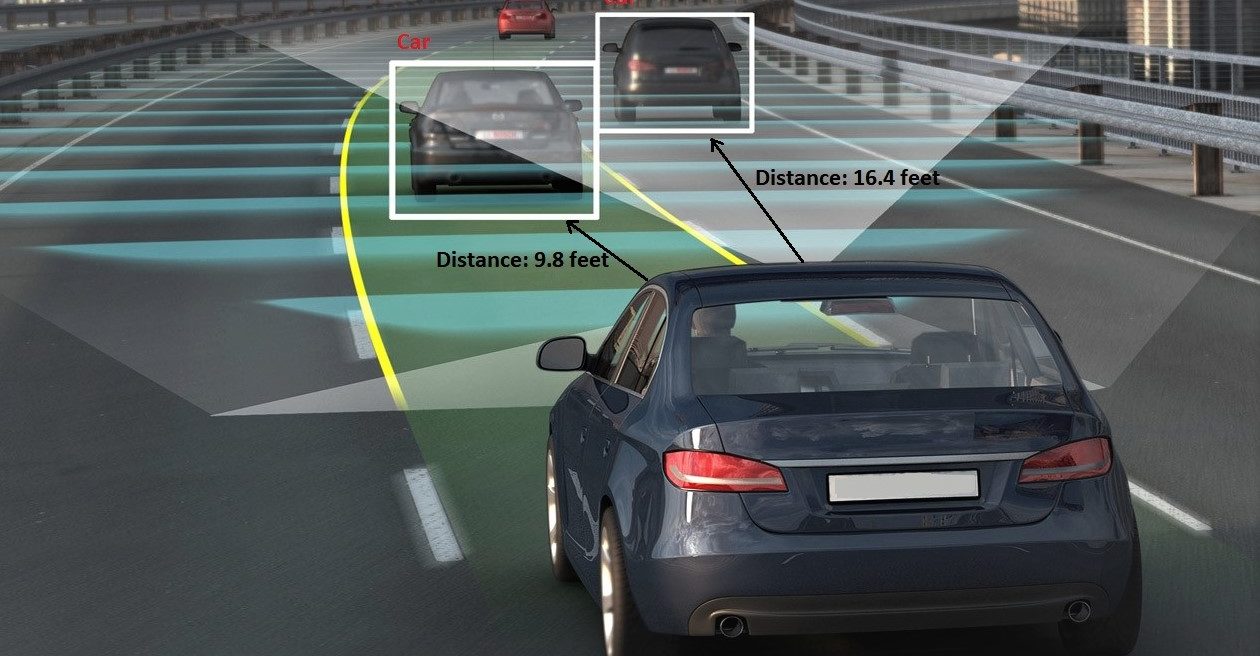

Abstract: In this lecture, an overview of autonomous car technologies (structure, HW/SW, perception) will be presented, focusing on car vision. An example autonomous vehicle will be presented as a special case, including its sensors and algorithms. Then, an overview of computer vision applications in this vehicle, such as visual odometry, lane detection and road segmentation, will be presented. Finally, the current progress of autonomous driving will be introduced.

AUDIENCE

Any practicing engineer or scientist, or student, with some knowledge of computer vision and/or machine learning, notably CS, CSE, ECE and EE students, graduates or industry professionals with a relevant background.

IF I HAVE A QUESTION?

Feel free to contact us for any further information by emailing Ioanna Koroni (koroniioanna@csd.auth.gr) with the subject “Short Course 2022”.

PAST COURSE EDITIONS

——————————————————————————————————————

2020

Participants: 21

Countries: Belgium, Ireland, Greece, Finland, China, Italy, France, Croatia, Spain, United Kingdom

Registrants' comments:

- “Very interesting lecture topics regarding the autonomous systems perception.”

- “The overall course was quite interesting and fulfilling in terms of the context promised.”

- “The lectures were very appealing and satisfactorily delivered.”

——————————————————————————————————————

Participants: 39

Countries: United Kingdom, Scotland, Germany, Italy, Norway, Slovakia, Spain, Croatia, Czech Republic, Greece

Registrants' comments:

- “Very adequate information about the topics of DL, CV and autonomous systems.”

- “Very good coverage of autonomous systems vision perception.”

- “Course’s content was greatly explanatory with many application examples.”

- “Very well structured course, knowledgeable lecturers.”

——————————————————————————————————————

SPONSORS

If you would like to become a sponsor, please send an email to koroniioanna@csd.auth.gr.

SAMPLE COURSE MATERIAL & RELATED LITERATURE

1.) C. Regazzoni, I. Pitas, “Perspectives in Autonomous Systems research”, Signal Processing Magazine, September 2019

2.) Artificial neural networks

3.) 3D Shape Reconstruction from 2D Images

4.) Overview of self-driving car technologies

5.) I. Pitas, “3D imaging science and technologies”, Amazon CreateSpace preprint, 2019

6.) R. Fan, U. Ozgunalp, B. Hosking, M. Liu, I. Pitas, “Pothole Detection Based on Disparity Transformation and Road Surface Modeling”, IEEE Transactions on Image Processing (accepted for publication 2019).

7.) Rui Fan, Xiao Ai, Naim Dahnoun, “Road Surface 3D Reconstruction Based on Dense Subpixel Disparity Map Estimation”, IEEE Transactions on Image Processing, vol. 27, no. 6, June 2018

8.) Umar Ozgunalp, Rui Fan, Xiao Ai, Naim Dahnoun, “Multiple Lane Detection Algorithm Based on Novel Dense Vanishing Point Estimation”, IEEE Transactions on Intelligent Transportation Systems, vol. 18, no. 3, March 2017

9.) Rui Fan, Jianhao Jiao, Jie Pan, Huaiyang Huang, Shaojie Shen, Ming Liu, “Real-Time Dense Stereo Embedded in A UAV for Road Inspection”, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019

10.) V. Mygdalis, A. Iosifidis, A. Tefas, I. Pitas, “Semi-Supervised Subclass Support Vector Data Description for image and video classification”, Neurocomputing, 2017

11.) N. Tsapanos, A. Tefas, N. Nikolaidis, I. Pitas, “Neurons With Paraboloid Decision Boundaries for Improved Neural Network Classification Performance”, IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 14 June 2018, pp. 1-11

12.) P. Nousi, E. Patsiouras, A. Tefas, I. Pitas, “Convolutional Neural Networks for Visual Information Analysis with Limited Computing Resources”, 2018 IEEE International Conference on Image Processing (ICIP), Athens, Greece, October 7-10, 2018

13.) V. Mygdalis, A. Tefas, I. Pitas, “Learning Multi-graph regularization for SVM classification”, 2018 IEEE International Conference on Image Processing (ICIP), Athens, Greece, October 7-10, 2018

14.) P. Chriskos, R. Zhelev, V. Mygdalis, I. Pitas, “Quality Preserving Face De-Identification Against Deep CNNs”, 2018 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Aalborg, Denmark, September 2018

15.) P. Chriskos, O. Zoidi, A. Tefas, and I. Pitas, “De-identifying facial images using singular value decomposition and projections”, Multimedia Tools and Applications, 2016

16.) P. Nousi, A. Tefas, I. Pitas, “Deep Convolutional Feature Histograms for Visual Object Tracking”, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019

17.) V. Mygdalis, A. Tefas, I. Pitas, “Exploiting multiplex data relationships in Support Vector Machines”, Pattern Recognition, vol. 85, pp. 70-77, 2019

USEFUL LINKS

• Prof. Ioannis Pitas: https://scholar.google.gr/citations?user=lWmGADwAAAAJ&hl=el

• Department of Computer Science, Aristotle University of Thessaloniki (AUTH): https://www.csd.auth.gr/en/

• Laboratory of Artificial Intelligence and Information Analysis: http://www.aiia.csd.auth.gr/

• Thessaloniki: https://wikitravel.org/en/Thessaloniki