Abstract

This lecture gives an overview of attention mechanisms and Transformer networks, which are the current state of the art in neural networks and have many applications in Natural Language Processing (NLP) and Computer Vision. It covers the following topics in detail: attention mechanisms, self-attention, scaled dot-product attention, and multi-head scaled dot-product attention. The Transformer architecture, the bottlenecks of Transformers, and Efficient Transformers are also presented thoroughly.

Transformers are compared to RNNs, LSTMs and GRUs.

In natural language processing, Transformer networks are applied to machine translation, text summarization, question answering, and document generation. More recently, they have been applied to standard computer vision tasks such as image recognition, object detection, and image segmentation, achieving state-of-the-art results.

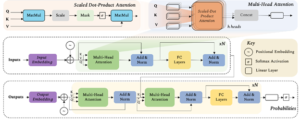

Figure: Typical Transformer architecture.

Attention and Transformer Networks v1.5 summary